You may be used to Mac apps using red underlines to mark misspelled words, but did you know that macOS has also long included a fully featured Dictionary app? It provides quick access to definitions and synonyms in the New Oxford American Dictionary and the Oxford American Writer’s Thesaurus, along with definitions of Apple-specific words like AirDrop and Apple ProRes RAW. But that’s far from all it can do.

Getting on the Same Page

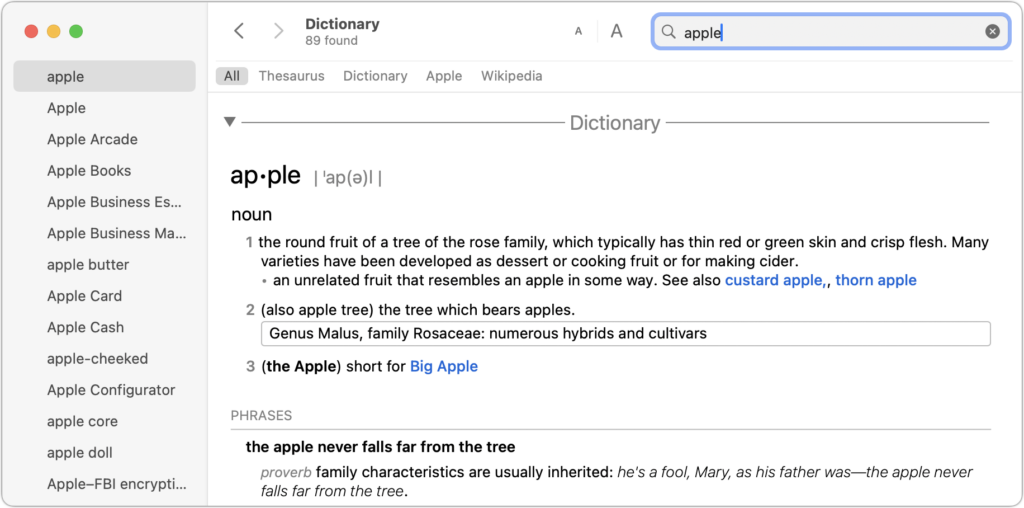

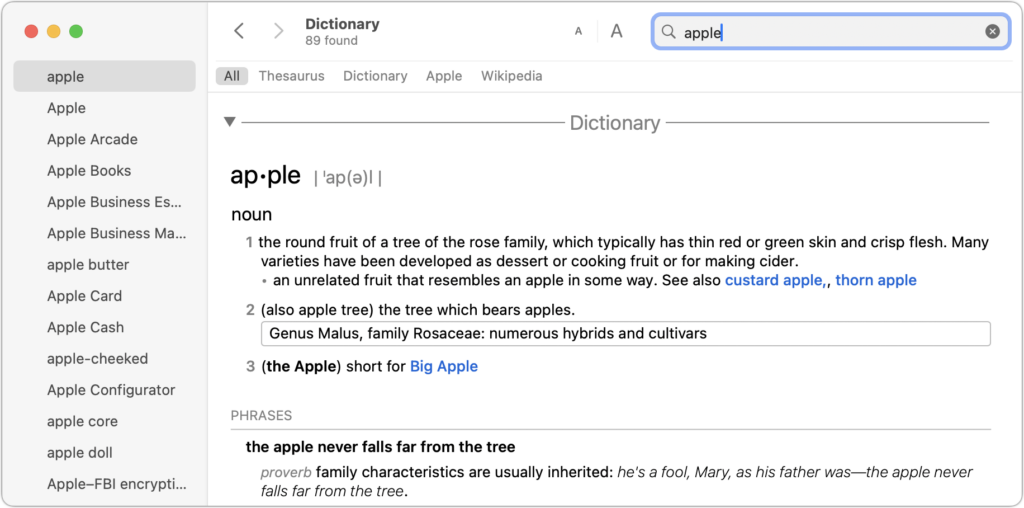

First, some basics. Open the Dictionary app from your Applications folder and type a word or phrase into the Search field. As you type, Dictionary starts looking up words that match what you’ve typed—you don’t even have to press Return. It’s a great way to look up a word when you aren’t quite sure of the complete spelling. If more than one word matches what you’ve typed, click the desired word in the sidebar.

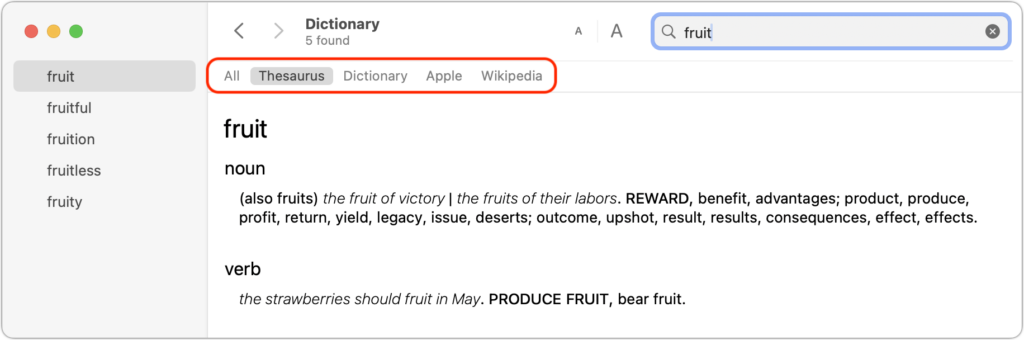

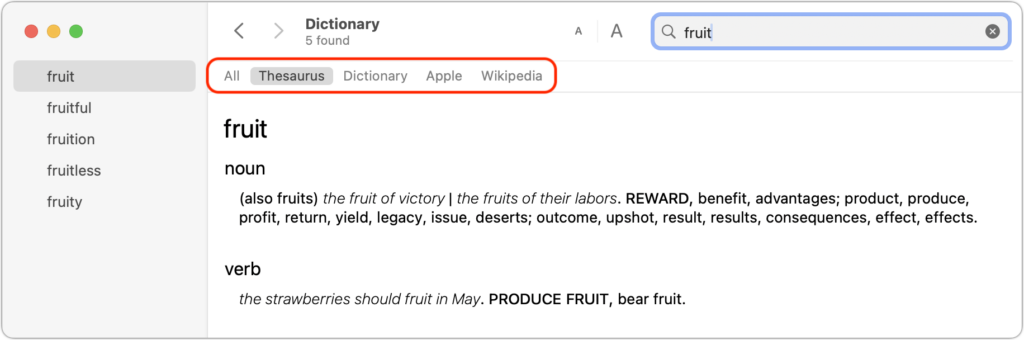

Notice the gray buttons below the toolbar, which represent the references Dictionary will consult for every search, including Wikipedia if your Mac has an Internet connection. In short, Dictionary gives you instant access to a dictionary, a thesaurus, and an encyclopedia containing over 6.8 million articles in English. Click a reference to limit your search to that source, or click All to scan all of them.

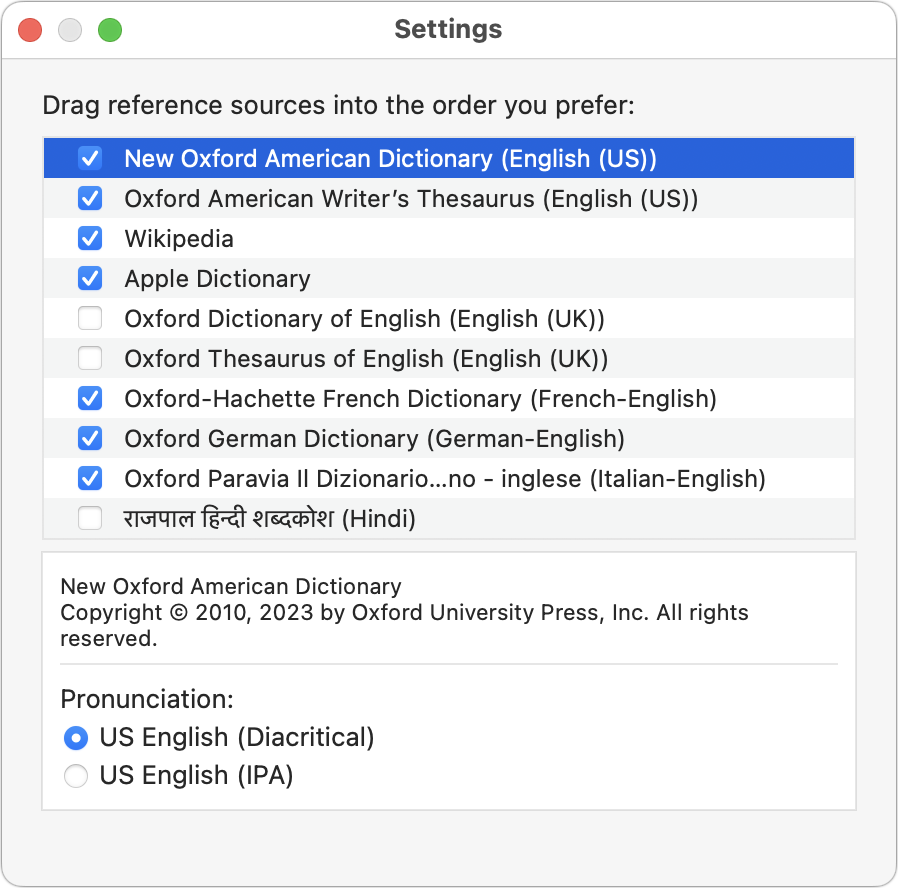

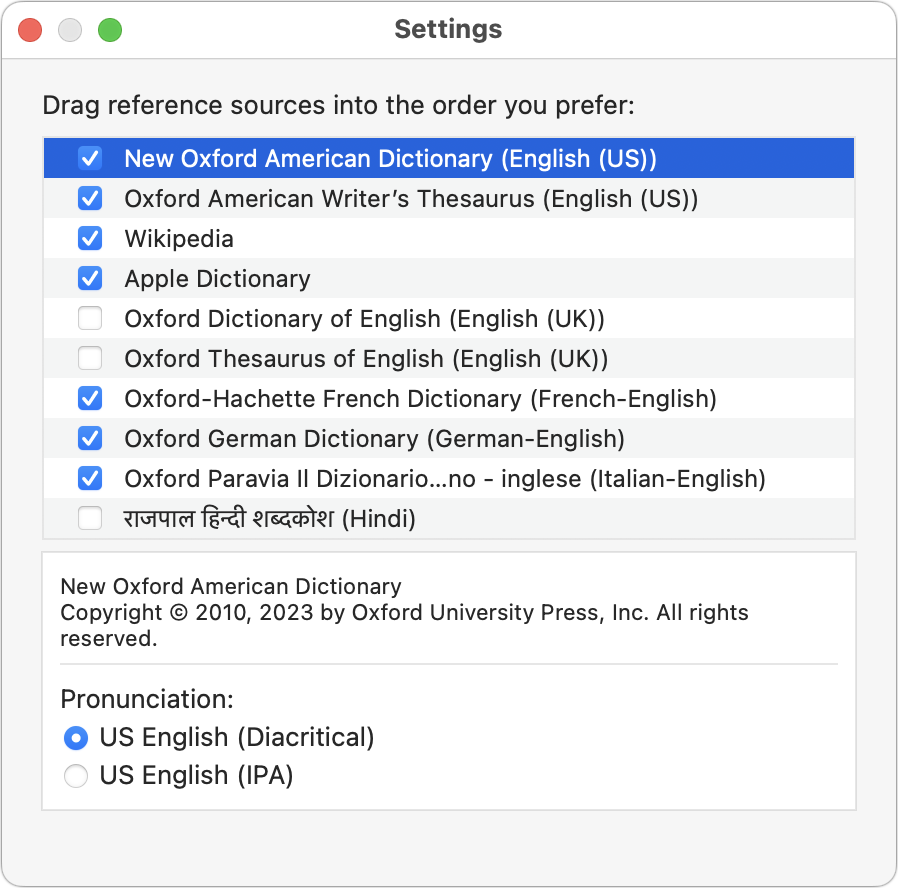

If you want to look up words in another language and get an English definition, Dictionary even provides translation dictionaries alongside a long list of other reference works. Choose Dictionary > Settings and select the ones you’d like to use. Then, drag the selected entries into the order you want them to appear below the toolbar.

Once you’re in a definition, note that you can copy formatted text for use in other apps—always helpful when wading into grammar and usage arguments on the Internet. More generally, you can click nearly any word in Dictionary’s main pane to look it up instantly. If dictionaries had been this much fun in school, we’d all have larger vocabularies! Use the Back and Forward arrow buttons to navigate among your recently looked-up words.

Alternative Lookup Methods

As helpful as the Dictionary app is, you probably don’t want to leave it open all the time. Happily, Apple has provided several shortcuts for looking up words:

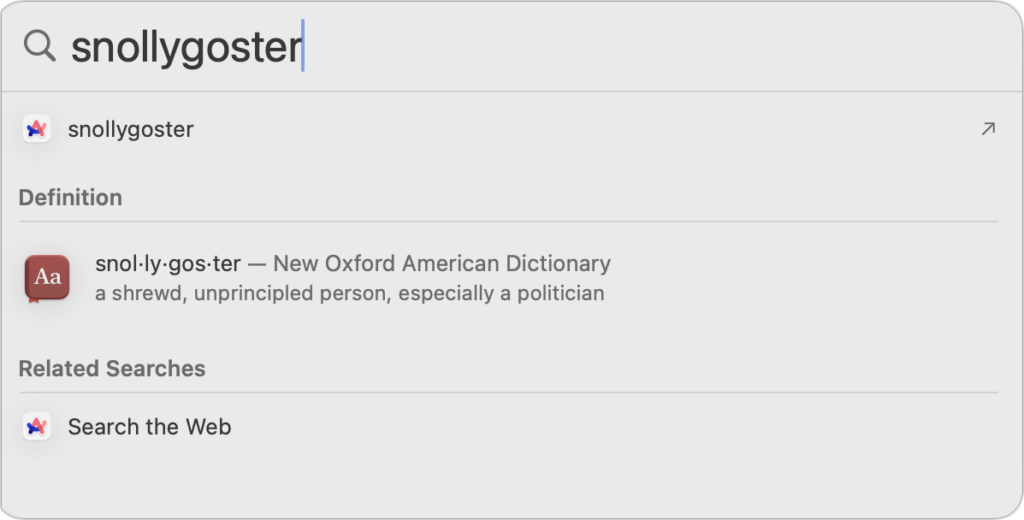

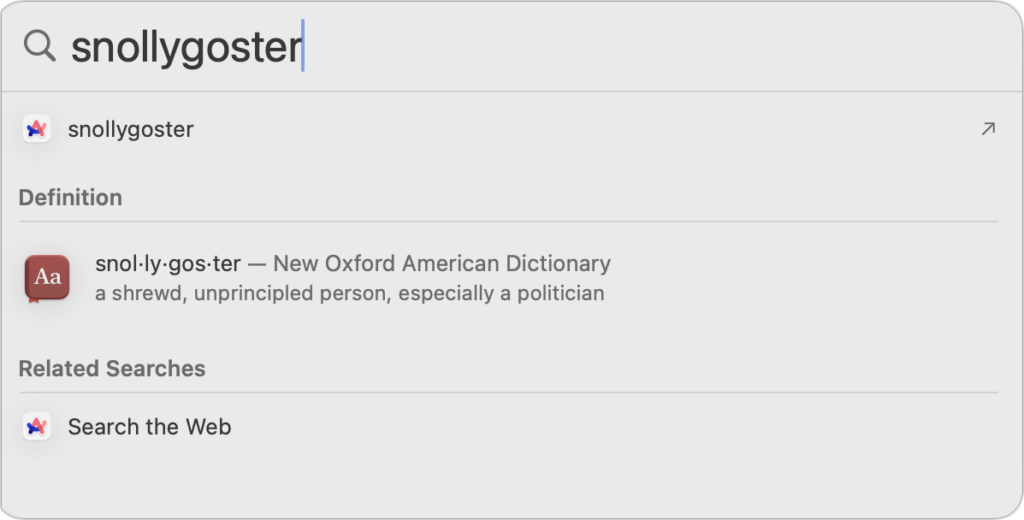

- Spotlight: Press Command-Space to invoke Spotlight, and enter your search term. If you get too many unhelpful results from Spotlight, deselect unnecessary categories in System Settings > Siri & Spotlight.

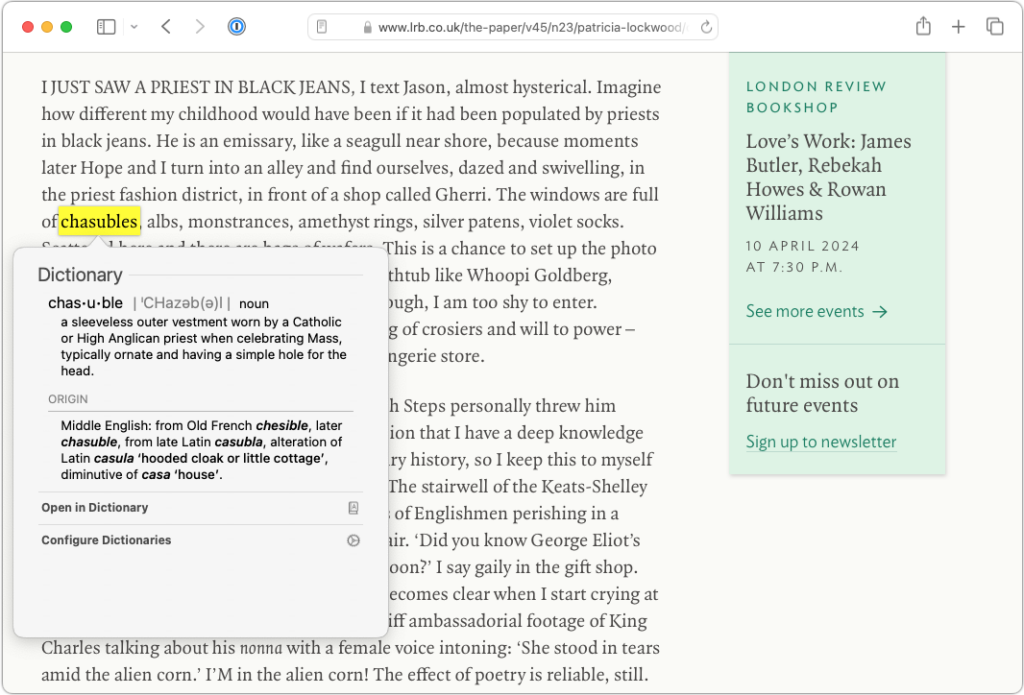

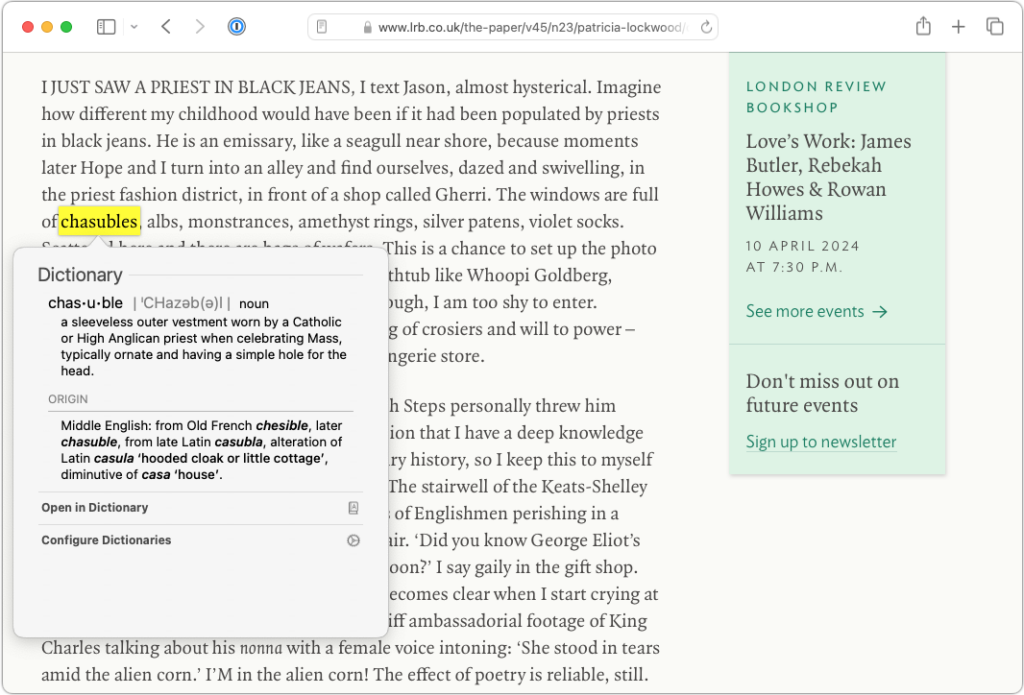

- Look Up: Even better, hover over a word or phrase with the pointer and press Command-Control-D, or Control-click the word and choose Look Up “word.” If the app supports it, macOS displays a popover with the definition. If you use a trackpad, you can also tap the word with three fingers, provided “Look up & data detectors” is enabled in System Settings > Trackpad > Point & Click.

Now that you know how to take full advantage of the reference works Apple has built into macOS, it’s time to get in touch with your inner logophile—look it up.

(Featured image by iStock.com/Chinnapong)